As LLMs continue to work their way into more and more aspects of our lives, we find ourselves trying to describe them in terms of the world that came before. Marketers and hypesters front-run our own imagination with depictions of executive assistants, professional mentors, romantic partners (big yikes, y’all), and life coaches. All these motivated metaphors have one thing in common. They seek to position LLMs as a kind of artificial life, with an intelligence of their own, to be commanded, respected, or even loved.

They call it AI, short for science fiction’s artificial intelligence, as a way of tapping into decades of storytelling and filling their sails with unearned wind. If we were to emerge from Plato’s cave and make untainted first contact with LLMs, we would describe them in much simpler terms. Here is my personal favorite:

It’s a content generator.

I think this was easiest to see when DALL-E and Stable Diffusion were first released. They were image generator algorithms. There wasn’t yet a giant green face built onto the front to misdirect you. You typed in some input, and then it would output an image. Nobody thought that there was a sentient mystic on the other side of the line, drawing the images and sending them back to you.

You could certainly pretend that’s what’s happening, as I did in July of 2022 when I asked DALL-E to draw a family portrait of itself.

Is DALL-E some kind of mongoose because it drew that four times? Is it an orphan because it drew that five times? Is it a half-domino-half-pencil living in infinite darkness?¹ It’s none of these things of course, but it’s fun to pretend!

LLMs that give text responses are no different. They still take a prompt as input, same as DALL-E. But instead of outputting an image, they output a blog post.

Blog post generators

In the olden days before LLMs, maybe you were trying to get Node.js installed on your Windows PC and it wasn’t obvious how. So you had to wait for a human to write a blog post about it. Then you could use a search engine to find that human’s blog post and follow it.

We don’t have to do that anymore. Nobody has to write the blog post. You can go to ChatGPT and type in the same query you used to give to a search engine, and it’s like it clicked “I’m Feeling Lucky” for you and took you straight to the top result.

I’m not trying to undersell how useful LLMs are. This is really friggin’ useful. In those before-times you probably also had the experience of searching for a blog post on some really specific thing you were trying to do, only to find that nobody had written it yet, so you were out of luck. You’re never out of luck anymore. The blog posts don’t need to have already existed, because the LLM will make them exist on demand. Blog post generator.

So what is AI then?

Who fucking knows! The obvious take is that fiction writers made the term famous, and science has no rigorous definition of either artificial or intelligence, so putting them together is compounded nonsense. But let’s put that take aside and carry on in good faith. Let’s just say that, despite not having a coherent understanding of either word in “artificial intelligence,” we will know it when we see it.

Are we seeing it? I think not.

Probably the most life-like aspect of LLMs is that we don’t know how they work. We generated a big pile of numbers and put them in a file. You give the numbers file to the algorithm, and blog posts come out the other end. The greatest LLM researcher on the planet couldn’t give you a better explanation than that. What do the numbers mean? We don’t know. Nobody knows.

And that makes them a lot like this other blog post generator we can’t figure out – the human brain.

For some, the similarity between number files and brain wrinkles is an awfully big hint that LLMs are intelligent. But isn’t it just as likely to go in the other direction? Maybe whatever LLMs are is the base truth, and humans are like LLMs.

There’s an active debate between free will and hard determinism for a reason. Nobody knows which one is correct. Maybe I’m just a big pile of numbers, parked at this cafe table with the smells and sounds of coffee at the top of my context window, generating the next sentence of this very blog post.

To put it another way, what if we study how LLMs work and end up discovering ego-deflating truths about how our own brains behave? There’s no less chance of that happening than of finding some emergent God Particle inside of ChatGPT.

So then why is everyone talking about AI?

I hinted at this earlier. The AI narrative was spun by marketers and hypesters with skin in the game. They want your attention, your money, or both. They’re not interested in truth-seeking or level-setting. If calling things AI will kick your adrenal glands into gear, or pry your wallet open, they’ll keep calling it AI.

To make sense of it all, I recommend splitting the question in two and answering each part on its own. As with so many misconceptions in the world, conflation is what clouds our judgment. The two questions:

- Is there evidence suggesting LLMs are AI?

- What are the implications of having AI in society?

Is there evidence suggesting LLMs are AI?

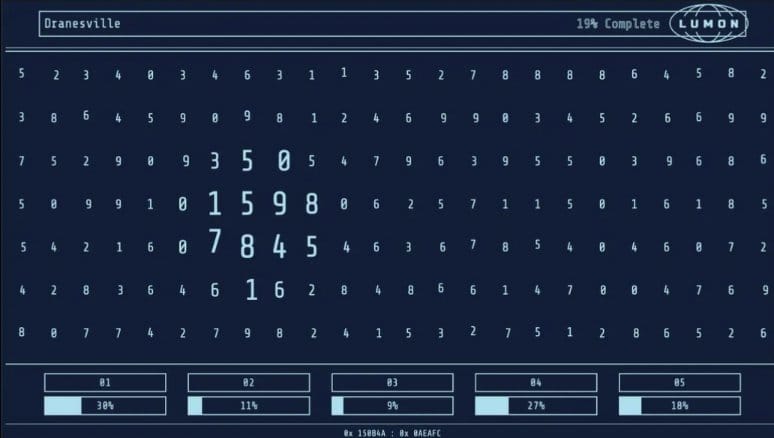

No. LLMs won’t durably pass the Turing Test. They’ll score some early wins in tightly controlled settings. Call it a first-mover’s advantage. But humans are adaptable. Over time we’ll all come to realize that no human can regurgitate a perfectly formatted recipe for fried chicken at 600 WPM and then follow up with a biographical summary of Geoffrey Hinton. Passing the Turing Test is more of a tightrope walk than a benchmark. If you’re too booksmart, you appear inhuman. Booksmart humans know that better than anyone. There are already memes about the telltale signs of an LLM: the em-dashes (oops!), the sycophantic backpedaling when challenged, and the cooking recipes shared in the middle of political debates.

Maybe LLMs are going to be a subsystem of some future AI, but they themselves are not AI. LLMs forget everything you just said to them the moment you start a new conversation. Once they’re trained, the only way for them to take on new knowledge is to bring it into their context window. No amount of caching and compressing that new knowledge will change the fact that an LLM has to share its learned experience with its reasoning space. Humans don’t work that way.
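Here’s a toy sketch of that squeeze, in Python. It assumes a made-up window measured in words instead of real tokens, and a hypothetical `fit_to_window` helper standing in for whatever a real chat product might do to stuff saved “memories” into the prompt. It isn’t how any particular product works; it just shows that when the numbers file never changes, every word of remembered knowledge is a word the conversation can’t use.

```python
from typing import List


# Hypothetical: real models count tokens, not words. Words keep the toy readable.
def word_count(text: str) -> int:
    return len(text.split())


def fit_to_window(memories: List[str], conversation: List[str], limit: int) -> List[str]:
    """Hypothetical helper: pack saved memories plus as much recent conversation
    as fits into a fixed-size context window. The weights never change, so this
    window is the only place new knowledge can live."""
    budget = limit - sum(word_count(m) for m in memories)
    kept: List[str] = []
    # Walk backwards from the newest turn, keeping turns until the budget runs out.
    for turn in reversed(conversation):
        cost = word_count(turn)
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    # Memories always go first, then the surviving turns in their original order.
    return memories + list(reversed(kept))


memories = ["Sam prefers Python.", "Sam lives in Denver."]  # illustrative only
conversation = [
    "How do I install Node.js?",
    "Use the official installer.",
    "Thanks, that worked.",
]

print(fit_to_window([], conversation, limit=15))
# ['How do I install Node.js?', 'Use the official installer.', 'Thanks, that worked.']
print(fit_to_window(memories, conversation, limit=15))
# ['Sam prefers Python.', 'Sam lives in Denver.', 'Use the official installer.', 'Thanks, that worked.']
```

With a 15-word window, two short memories are enough to push the original question out of the conversation entirely.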

There are other facets of the human experience we haven’t yet seen from LLMs. A more human-like system will need some way to retrain itself after a day’s experiences. That’s the only way to get new knowledge out of the context window and into the numbers file. An AI system is going to have to sleep, just like we do, to pull this off. Even if it’s superhuman polyphasic sleep, it will still sleep.

Humans also only experience time in one direction. If you say something hurtful to your friend, there’s no going back to before you said it. But LLMs don’t work that way. Did you mess up your prompt and get gobbledygook in the response? Hit undo and try a slightly better prompt from your last context checkpoint. The LLM will never know you said the other thing.

I actually recommend that as one of the practical takeaways of this article. People who think they’re talking to an AI will see a bad response from the LLM and write back: “That part is wrong, do it this way.” But why waste context window space on the LLM’s initial bad response? Why risk the parts of its response you did like decaying through regression to the mean? Instead, undo the context window to your last checkpoint and reprompt with stronger guidelines. That’s what people who recognize they’re prompting a content generator do.
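If you drive an LLM from code, the difference between arguing and rewinding is easy to see. This is a minimal sketch, not any vendor’s API: it assumes a conversation is a plain list of `{"role": ..., "content": ...}` dicts (loosely modeled on common chat formats), it never actually calls a model, and the helper names `append_correction` and `rewind_and_reprompt` are made up for illustration.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def append_correction(history: List[Message], correction: str) -> List[Message]:
    """Hypothetical helper: argue with the bad reply. It stays in the context."""
    return history + [{"role": "user", "content": correction}]


def rewind_and_reprompt(history: List[Message], checkpoint: int, better_prompt: str) -> List[Message]:
    """Hypothetical helper: drop everything after the checkpoint (the weak prompt
    and the bad reply) and replace it with a stronger prompt."""
    return history[:checkpoint] + [{"role": "user", "content": better_prompt}]


history: List[Message] = [{"role": "system", "content": "You write concise how-to guides."}]
checkpoint = len(history)  # remember where we were before prompting
history += [
    {"role": "user", "content": "How do I install Node.js on Windows?"},
    {"role": "assistant", "content": "(a rambling answer that gets a step wrong)"},
]

argued = append_correction(history, "That part is wrong, do it this way.")
rewound = rewind_and_reprompt(
    history,
    checkpoint,
    "How do I install Node.js on Windows? Use the official installer and give numbered steps.",
)

print(len(argued))   # 4: the bad reply is still in the context
print(len(rewound))  # 2: as if the bad reply never happened
```

Either list would be sent to the model in full on the next call. Only one of them still drags the bad answer along.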

What are the implications of having AI in society?

First, let’s recognize that some of the outcomes being attributed to AI are outcomes we can achieve today with plain content-generation technology.

We don’t need AI for the jobs to start disappearing. Algorithms take jobs all the time. Remember doing sums on a calculator and writing the results down in a ledger? Now spreadsheet formulas do it for you. An entire floor of number crunchers deposed by one guy with Excel. You can go back further. The machinery of that pesky Industrial Revolution destabilized the entire workforce without the help of any artificial intelligence.

It won’t be AI that brings the anonymous internet to its knees either, leaving it so full of bot-generated content that it’s impossible to find or converse with another human. All we need is inexpensive content generation at the speed of attention. The internet was already full of bots anyway, and now all those bots are LLMs too. This is the premise of the Dead Internet Theory: once a conspiracy theory, now an inevitability.

Last, let’s speak briefly of the AI intelligentsia: a sturdy mix of moral philosophers, technocrats, and former neural network researchers. They are the ones clogging up all your podcast feeds with chatter about alignment problems, probabilistic armageddon, and artificial general intelligence. (Our earlier ambiguous words have formed a thruple, how trendy!²) Every last one of these people is Mark Zuckerberg selling you the Metaverse.

Oh, how I wish they were clogging the Hollywood script pipeline instead of my podcast feeds! I’d watch, like, every single one of these movies. I just don’t live in any of them. I live in a world that has a blog post generator.